Creating and managing topics

Kafka topics may be created in a self-service manner through Entur’s Kafka management tool at Entur Kafka Admin. By default, you will have access to create topics on behalf of your team, as well as to update topic configurations for the topics where your team is the topic-owner.

Topics

Kafka topics may be created in a self-service manner through Entur's Kafka management tool at Kafka Admin API. All Entur users can create and manage topics on behalf of their team, provided that their logged-in Auth0 user has a valid team membership (and that this team has been granted access to the Kafka platform).

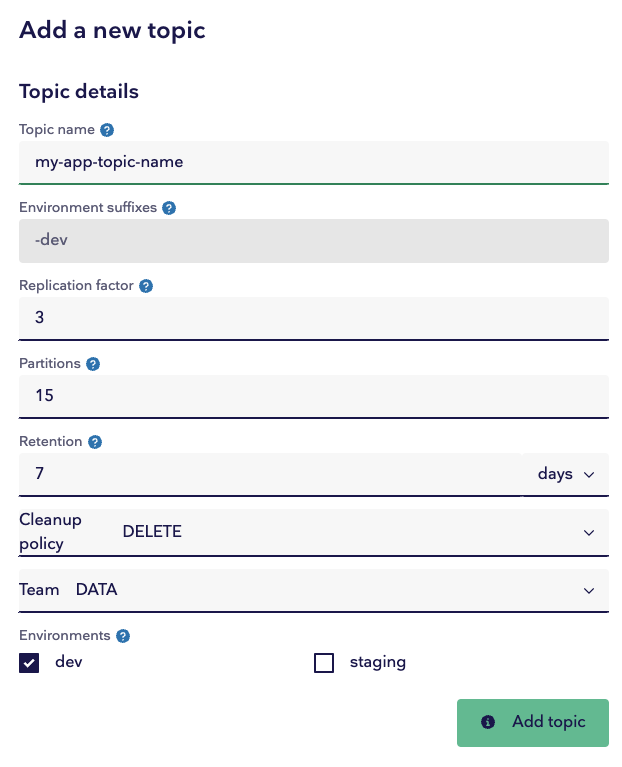

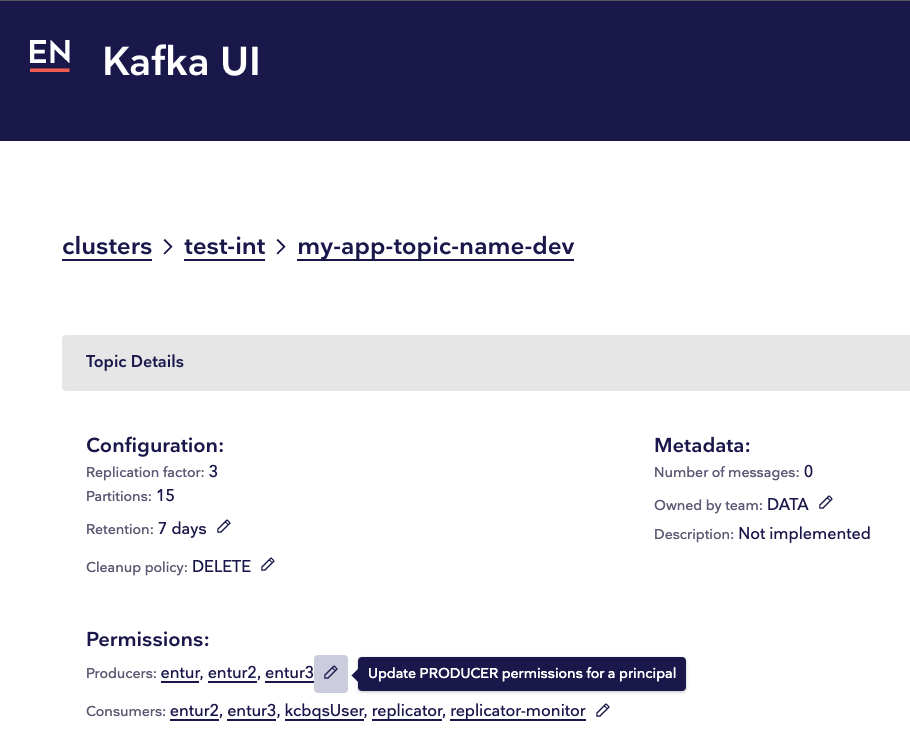

To create a topic, use the "Add a new topic" section of the Kafka admin web app, as described below. Your team then owns the topic it created, so the team may change the topic configuration at a later point. Once the topic is created, you can find it with the search functionality at the top of the web page, in the cluster where it was created. Clicking on the topic takes you to a page showing its details.

Please note that you may delete topics only in the test clusters, not in the prod clusters. To have a topic deleted in a prod cluster, please contact a data platform administrator. Entur users may do so by reaching out in #talk-data.

Creating the topic

Name

Topic names should describe the data that will be published to the topic. Where appropriate, topic names should indicate which organization the data belongs to. An environment suffix is automatically appended to your user-defined topic name, e.g. app-some-topic-dev.

Replication factor

Replication factor indicates how many replicas of each partition should be distributed to distinct brokers. This is typically left at 3, but could be as high as the number of brokers in the cluster.
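As a rough illustration of why the replication factor cannot exceed the broker count, the sketch below places each partition's replicas on distinct brokers. The function `place_replicas` and its simple round-robin placement are assumptions made for this example. Kafka's real assignment logic is more involved.

```python
def place_replicas(num_partitions: int, replication_factor: int,
                   brokers: list[int]) -> dict[int, list[int]]:
    """Place each partition's replicas on distinct brokers (simplified round-robin)."""
    if replication_factor > len(brokers):
        # Each replica needs its own broker, so this cannot be satisfied.
        raise ValueError("replication factor cannot exceed the number of brokers")
    placement = {}
    for partition in range(num_partitions):
        placement[partition] = [
            brokers[(partition + i) % len(brokers)]
            for i in range(replication_factor)
        ]
    return placement


placement = place_replicas(num_partitions=2, replication_factor=3, brokers=[1, 2, 3, 4])
print(placement)  # {0: [1, 2, 3], 1: [2, 3, 4]}
```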

Partitions

The number of partitions the topic is divided into. Partitions are the unit of parallelism in Kafka for both reads and writes, which means a higher partition count can increase throughput. For example, the number of simultaneously active consumers in any consumer group is bounded by the number of partitions.
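The bound on active consumers can be seen in a small sketch. It assumes a simplified round-robin assignment within one consumer group. The function names and consumer ids are hypothetical, and Kafka's actual assignors differ in detail, but each partition is still owned by at most one consumer in the group.

```python
def assign_partitions(num_partitions: int, consumers: list[str]) -> dict[str, list[int]]:
    """Assign each partition to exactly one consumer in the group (round-robin)."""
    assignment: dict[str, list[int]] = {consumer: [] for consumer in consumers}
    for partition in range(num_partitions):
        owner = consumers[partition % len(consumers)]
        assignment[owner].append(partition)
    return assignment


def active_consumers(assignment: dict[str, list[int]]) -> list[str]:
    """Consumers that own at least one partition; the rest sit idle."""
    return [consumer for consumer, partitions in assignment.items() if partitions]


assignment = assign_partitions(3, ["c1", "c2", "c3", "c4", "c5"])
print(assignment)                         # c4 and c5 receive no partitions
print(len(active_consumers(assignment)))  # 3
```

With three partitions and five consumers, only three consumers do any work. Adding more consumers than partitions adds no read parallelism.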

While it is technically possible to increase the partition count later on, this carries a number of challenges. In particular, keys may then map to different partitions than before, so ordering guarantees for messages with the same key are lost. Instead, it is common practice to over-partition somewhat in order to be more future-proof.
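The ordering problem comes from how keyed messages are routed: the partition is derived from a hash of the key modulo the partition count. The sketch below uses CRC32 purely as a stand-in hash (Kafka's default partitioner uses a different hash function), and the keys are made up for illustration.

```python
import zlib


def partition_for(key: str, num_partitions: int) -> int:
    """Pick a partition from the key hash (CRC32 is a stand-in, not Kafka's hash)."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


keys = [f"vehicle-{i}" for i in range(20)]  # hypothetical message keys

# Compare where each key lands before and after growing from 6 to 8 partitions.
moved = [key for key in keys if partition_for(key, 6) != partition_for(key, 8)]
print(f"{len(moved)} of {len(keys)} keys map to a different partition")
```

Any key that moves will have its older messages in one partition and its newer messages in another, and Kafka only guarantees ordering within a single partition.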

A higher partition count does carry tradeoffs, including more open file handles and potentially a (very) slight increase in end-to-end latency.

Retention

Retention defines the time to live for individual messages. This property works in tandem with the cleanup policy.

Cleanup policy

Cleanup policy determines how to handle cleaning up messages that have passed their retention duration. There are two options:

- DELETE simply deletes messages older than the retention period.
- COMPACT will delete messages older than the retention period, so long as a message with the same key and a higher offset (i.e. newer offset) exists. This policy is useful if you need to retain the latest version of a domain object.
  - This should not be used for events with all-unique keys (such as topics with data that do not represent persistent domain objects), as it will lead to infinite retention of all messages.
  - If you want to store data long term, the correct course of action is to set up a pipeline (using for instance Kafka Connect) to transfer your data to BigQuery using a sink.
  - Also note that attempting to produce a message with a null key to a log compacted topic will result in an exception.
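The effect of compaction can be sketched as keeping only the highest-offset record per key. This is a simplified model, not Kafka's implementation: the `Record` type and `compact` function are hypothetical, and retention timing is ignored.

```python
from typing import NamedTuple


class Record(NamedTuple):
    offset: int
    key: str
    value: str


def compact(log: list[Record]) -> list[Record]:
    """Keep only the record with the highest offset for each key."""
    latest: dict[str, Record] = {}
    for record in log:
        current = latest.get(record.key)
        if current is None or record.offset > current.offset:
            latest[record.key] = record
    return sorted(latest.values(), key=lambda record: record.offset)


# Keys that represent domain objects: older versions are dropped.
log = [
    Record(0, "stop-1", "v1"),
    Record(1, "stop-2", "v1"),
    Record(2, "stop-1", "v2"),
]
print(compact(log))  # keeps offset 1 (stop-2) and offset 2 (stop-1)

# All-unique keys: nothing is ever superseded, so nothing is removed.
events = [Record(i, f"event-{i}", "payload") for i in range(3)]
print(len(compact(events)))  # 3
```

The second case shows why compaction is a poor fit for event streams with unique keys: every record is the latest for its key, so the topic grows without bound.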

Team

The team field should be set to the team to which your topic belongs. This dropdown may not be visible to you if you do not have access to create topics for multiple teams, in which case it will automatically choose the team to which you belong. This is not a Kafka property, but rather an admin tool and documentation feature. It does not affect the topic itself in any way.

Environments

Some clusters serve multiple environments. When creating topics, you will often want identical setups for both dev and staging. If you only wish to create topics for one or the other, simply deselect the environments you do not want topics created for. Note that an environment suffix equal to the environment name will be appended to your final topic name(s).
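The resulting names can be sketched as follows. The `topic_names` helper is hypothetical, and the `-` separator is inferred from the app-some-topic-dev example in the naming section.

```python
def topic_names(base_name: str, environments: list[str]) -> list[str]:
    """Append each selected environment name as a suffix to the base topic name."""
    return [f"{base_name}-{environment}" for environment in environments]


print(topic_names("app-some-topic", ["dev", "staging"]))
# ['app-some-topic-dev', 'app-some-topic-staging']

print(topic_names("app-some-topic", ["dev"]))
# ['app-some-topic-dev']
```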

Entur Kafka Clusters

Entur maintains four distinct Kafka clusters (in addition to an unstable internal development cluster). For each environment (test or production), there is an internal and an external Kafka cluster.

Internal clusters are for unfiltered data, and only internal Entur applications are granted access.

External clusters are for data ready for consumption by external organisations. Such data is typically (but not always) filtered into separate topics per organisation, with only the owning organisation being granted access to consume each topic.

Topic permissions

After locating a topic for which you would like to change permissions, such as https://entur-data-kafka-admin.entur.org/clusters/entur-clusters/test-int/my-app-topic-name-dev, you can see which Kafka users have producer and/or consumer permissions under the "Permissions:" section in the web app.

By clicking on the edit-icon next to the list of producers or consumers, you may then either allow or deny a specific Kafka user access to your topic. In other words, this is where you may grant access to your application's Kafka user(s) to consume and/or produce messages for this topic. Happy streaming! 🎉